SA public service warned about ChatGPT amid ethics debate

Concerns about South Australian public sector data being uploaded into artificial intelligence programs have prompted new guidelines for public servants.

Photo: Florence Lo/Reuters

The Office of the Chief Information Officer (OCIO) on May 31 issued guidelines to South Australian government departments and staff about the use of large language models, such as ChatGPT and Google Bard, for government work.

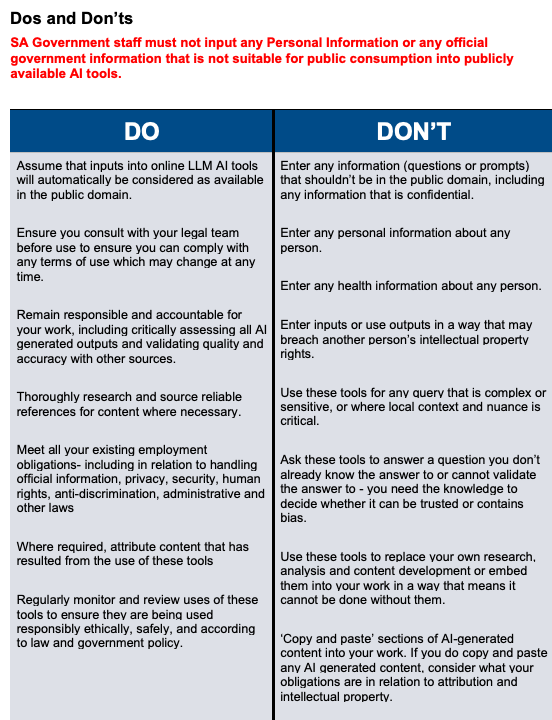

The guidelines state that South Australian government staff “must not input any personal information or any official government information that is not suitable for public consumption into publicly available AI tools”.

They further state that public servants should not give AI programs any personal or health information, nor any information that could breach another person’s intellectual property rights.

The new guidelines’ list of recommendations for the usage of artificial intelligence in the public sector.

A Department of Premier and Cabinet (DPC) spokesperson told InDaily the new AI guidelines complement the existing South Australian public sector code of ethics, which governs information handling and public servants’ responsibilities to protect official information.

“Breaches of the Code or failing to adhere to the Framework can have serious ramifications for employees, who have regular training to reinforce these requirements,” the spokesperson said.

According to the Chief Information Officer, megabytes of data are being inputted every day into ChatGPT from South Australian public sector networks.

As well as issuing the new guidelines, the OCIO reported its concerns about this in a note to State Cabinet on August 21.

But the DPC spokesperson said there is “no evidence to suggest that any sensitive information from State Government employees has been incorporated into artificial intelligence via government networks”.

The OCIO’s concerns were revealed by the state government’s Office for Data Analytics (ODA) in a submission to the newly established parliamentary inquiry into artificial intelligence.

The ODA, an office within DPC, warned that AI “threatens national security” and pointed to “reports of foreign controlled AI bots promoting unrest against and within Australia via anti-AUKUS and anti-Voice sentiment”.

“With South Australia now handling major components of the AUKUS contract and deeply involved in defence, awareness and education of AI is more important than ever before,” the ODA submitted.

The ODA also said the South Australian public sector “has only recently pivoted towards a strategic focus and position on the use of AI”.

“The prevalence and potential consequences of public sector data entering AI spaces prompted the creation of the South Australian Across Government AI Governance Working Group which met on 31 July 2023,” it said.

The AI working group, which the ODA chairs, identified “upskilling” the public service on AI awareness, use and risk as one of its primary concerns.

“The South Australian public service comprises approximately 12 per cent of the state’s workforce, representing a significant opportunity for savings and efficiencies.

“AI improves productivity from automation. It is a useful tool to overcome resource constraints and prioritise critical work.

“At the same time, users must be proficient and knowledgeable in using AI in a safe and appropriate way that does not compromise public sector data.”

The ODA said the AI working group is intended to dissolve in December, with consideration to be given to establishing an advisory body “to consult on technical and policy positions by balancing ethics and risk”.

“Public sector agencies would present their AI work or project to the advisory committee, seeking endorsement or ethical direction,” the ODA said.

New AI guidelines ‘a decent effort’

Among the other recommendations in the government’s guidelines is that AI should not be used to “answer a question you don’t already know the answer to or cannot validate the answer to”.

There is also a caution against using AI for queries that are complex, sensitive or “where local context and nuance is critical”.

In its submission to the parliamentary committee, the Campaign for AI Safety, a self-described association of people who are “concerned about the dangers of AI”, recommended the South Australian Government develop AI “onboarding materials” for new staff as well as annual refresher training.

“Mostly it’s about educating public servants and making sure they understand the rules and what to do and not to do with using ChatGPT, for example,” Nik Samoylov, the campaign’s founder, told InDaily.

“Basically, the government should do what many other companies, large companies, are already doing – they’re doing training on how to use generative AI, whether to use it.”

Samoylov, who is also the founder of market research firm Conjointly, which uses AI to produce automated survey summaries for clients, further warned that entering information into AI tools like ChatGPT and Bard can result in that data being transferred overseas.

“Sensitive data, even if it’s not used for training (the AI models), it’s travelling overseas,” he said.

“Australians’ data should not be travelling overseas without Australians knowing and consenting to it.”

Asked about the new state government guidelines, Samoylov said: “I think these guidelines are a decent effort.

“Especially if this becomes an evolving document that will be updated from time to time because the technology and legalities around it are evolving fast.”